Artificial intelligence gives rise to apprehension in people, in both senses of that word – understanding, and fear. People conjure up images of an android – a humanized robot, possibly run amok – that is able to store and regurgitate information vastly faster and more accurately than mere humans, and to integrate, decide and, in some instances, worryingly, act on its decisions. In fiction and film the ‘droid is often presented as an asexual, unemotional, fully rational entity without personality: a human-like machine. On the other hand, it could be hard to distinguish an android from an actual conscious human being. But are these apprehensions correct?

AI: Something to learn? Or something to fear?

For the most part, though, when we say Artificial Intelligence today – AI (or perhaps more accurately, AGI, Artificial General Intelligence) – we are talking not about robots or androids (not yet, but they are coming fast) but about vast arrays of online, internet-connected computers running large language model (LLM) software, responding to questions from users (presumably human) and providing thorough, articulate written, or even spoken, answers. These computer programs may be intelligent, but they are not mobile and do not have articulated arms or opposable fingers and thumbs, so in that state they have no capacity to cause direct physical harm. Probably. Anyway…

The worries we may have about smart robots may be a topic for another post, but a vast array of computers in uncertain locations is scary enough for many people. And I get it. It took me quite a few hours and days to write this, and the previous blog post, and you will have to work hard for at least 20 minutes to read it and make what you can of it, or just trash it. But if you are a computer program reading this, you don’t care and you won’t trash it – it takes you a mere second to read it and record it in memory, ready to extract the essence for some other purpose at some other time.

It is thoroughly evident today that AI machines that simulate human intelligence using heuristic programming methods fully meet Alan Turing’s test of intelligence. In many ways modern AI devices exceed, by an extraordinary margin, the human capacity to carry on a conversation with a human interlocutor, receive instructions, search and process information, and provide a coherent written and oral report. And if a picture is worth a thousand words, they can draw too.

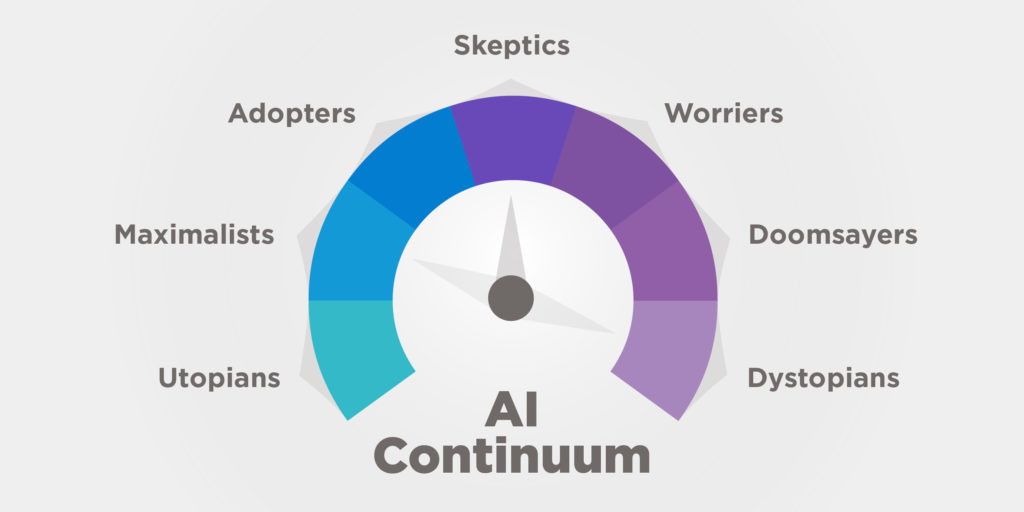

People are not uniformly apprehensive of AI, one way or another. In fact, we might even hazard that people hold an array of attitudes, ranging from committed positive belief to abject terror.

Psychologically speaking, I’m inclined to skepticism mixed with a bit of pragmatism (late adopter?) and a bit of hopefulness. Okay, confused, nervously curious.

AI Utopians – AI Utopians think AI devices, including the most advanced humanoids, will bring a perfect society to mankind. All menial work will be eliminated – any job a machine can do should be done by a machine, freeing people from drudgery of any kind. Every human will have a robot as a personal servant; class structure will be eliminated, and there will be no more power struggles or politics; people will be free from want and free to do whatever they want. A time of perfect harmony, idleness and leisure. But what exactly would people do with all this free time?

AI Maximalists – Maximalists are all-in with the new technology and see only its positives. They may not think of themselves as Utopians, but they are certainly, in effect, enabling that future state of society. They see little difference between machine learning devices doing repetitive tasks that humans once did and droids performing advanced, intricate maneuvers; and we’re not talking here about automated assembly-line tools or vacuum cleaners, we’re talking about dentists and surgeons.

AI Adopters – Adopters are moderate optimists. They’re not fearful of the future of AI (though they respect its possible limitations) and are willing to give an application a try if it is shown to be painless to learn and useful to adopt. Adopters welcomed Siri and Alexa into their lives and are willing to have Grammarly or ChatGPT edit and rewrite a proposal letter to a potential client. (They may not yet be ready to have a chatbot write a love letter to their girlfriends; the girlfriends may, however, be happy to have Microsoft Copilot write their Dear John letters.)

AI Skeptics – These types see AI’s promises as exaggerated hype. They don’t doubt that powerful computer servers and sophisticated language processors do a good job of reporting their findings in response to users’ questions, but they see them simply as elaborate search engines. They also question the appropriateness of the label: even if AI machines pass the Turing test for mimicking intelligence, they are not intelligent in the human, conscious sense of the word. They may be good at gathering information and regurgitating it, but can they distinguish fact from fiction? Would the AI processor’s algorithm be able to distinguish widely reported misinformation from the truth, and would it question its own findings? Skeptics are open to future AI developments, but not unquestioningly; they are willing to learn from AI Adopters and are doubtful of the extremists’ misapprehensions, but they reject the wilder claims made for these technological advances. They wonder about the ethical implications of AI misuse.

AI Worriers – The Worriers do worry about the future implications of AI but are willing to wring their hands and let things run their course. After all, humans have worried about technological advances for centuries: the steam engine; trains, planes and automobiles; electric communications devices – telegraph, telephone, television (teleprompters? tele-transporters?); computers – mainframe (HAL?), midi, mini, personal and handheld; the internet, Wikipedia, social media. (Now that’s something to worry about.) AI Worriers do worry about the ethical and societal implications of AI. And they worry that the AI Maximalists don’t worry enough.

AI Doomsayers – Doomsayers are the Luddites of the modern age. Whatever AI may bring to current social and societal norms, it’s not going to be good. Thousands, even millions, of people will be thrown out of work. Social upheaval and conflict will increase exponentially, and there will still be no solution for climate change.

AI Dystopians – Where the Doomsayers are fearful, the Dystopians see the future of AI as completely nightmarish, believing that the fears of the Doomsayers will actually come true. They are the exact opposite of the Utopians. Not only will intelligent robots take over all functions in society, but humanity will become completely redundant and subservient to the mother-computer: humans will become drones in service to the Borg collective. Resistance is futile.

Wherever you stand on this spectrum, there is little doubt that the application of this fast-emerging technology has societal, human and ethical implications, or that it should be weighed against them.

Regardless of your personal orientation to the present and future AI world, large philosophical questions arise. If machine intelligence is equivalent to human intelligence, what is it appropriate to delegate to a machine? If a robot runs amok, who do you sue? If an AGI device writes a best-selling novel, who collects the royalties? Is it ethical to allow students to have AI programs write their theses? Or for consultants, lawyers or interior designers to offer AI-generated advice to clients and charge substantial fees for it? What implications may AI have for human society? How much space will these vast server farms and their power generators take up? Will there be any room left for golf courses?

And most importantly, will authors lose all hope of generating and protecting their intellectual property? Ah, there’s the rub, which we will examine in the next post (or should we say, the last post?)

Doug Jordan, reporting to you from Kanata

© Douglas Jordan & AFS Publishing. All rights reserved. No part of these blogs and newsletters may be reproduced without the express permission of the author and/or the publisher, except upon payment of a small royalty, 5¢.